FastDepth: Fast Monocular Depth Estimation on Embedded Systems

- Diana Wofk*

- Fangchang Ma*

- Tien-Ju Yang

- Sertac Karaman

- Vivienne Sze

Massachusetts Institute of Technology

\* These authors contributed equally to this work.

Abstract

Depth sensing is a critical function for robotic tasks such as localization, mapping and obstacle detection. There has been a significant and growing interest in depth estimation from a single RGB image, due to the relatively low cost and size of monocular cameras. However, state-of-the-art single-view depth estimation algorithms are based on fairly complex deep neural networks that are too slow for real-time inference on an embedded platform, such as one mounted on a micro aerial vehicle. In this paper, we address the problem of fast depth estimation on embedded systems. We propose an efficient and lightweight encoder-decoder network architecture and apply network pruning to further reduce computational complexity and latency. In particular, we focus on the design of a low-latency decoder. Our methodology demonstrates that it is possible to achieve accuracy similar to that of prior work on depth estimation, but at inference speeds that are an order of magnitude faster. Our proposed network, FastDepth, runs at 178 fps on an NVIDIA Jetson TX2 GPU and at 27 fps when using only the TX2 CPU, with active power consumption under 10 W. FastDepth achieves close to state-of-the-art accuracy on the NYU Depth v2 dataset. To the best of the authors' knowledge, this paper demonstrates real-time monocular depth estimation using a deep neural network with the highest throughput on an embedded platform that can be carried by a micro aerial vehicle.
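The abstract credits much of the speedup to a lightweight encoder-decoder and, in particular, a low-latency decoder, but this page does not say which layer types are used. As an illustrative assumption only, the sketch below compares the multiply-accumulate (MAC) count of a standard k × k convolution with that of a depthwise separable convolution (a k × k depthwise convolution followed by a 1 × 1 pointwise convolution), one common way to reduce decoder cost. The layer dimensions and function names are hypothetical, and both layers are assumed to use stride 1 with "same" padding.

```python
def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a standard k x k convolution producing an h x w x c_out output."""
    # Each output element sums over a k x k window across all c_in input channels.
    return h * w * c_out * c_in * k * k


def depthwise_separable_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = h * w * c_in * k * k  # one k x k filter per input channel
    pointwise = h * w * c_in * c_out  # 1 x 1 conv mixes channels into c_out outputs
    return depthwise + pointwise


if __name__ == "__main__":
    # Hypothetical decoder layer (stride 1, "same" padding):
    # 28 x 28 output, 512 -> 256 channels, 5 x 5 kernel.
    dims = dict(h=28, w=28, c_in=512, c_out=256, k=5)
    standard = conv_macs(**dims)
    separable = depthwise_separable_macs(**dims)
    print(f"standard:  {standard:,} MACs")
    print(f"separable: {separable:,} MACs ({standard / separable:.1f}x fewer)")
    # standard:  2,569,011,200 MACs
    # separable: 112,795,648 MACs (22.8x fewer)
```

The ratio simplifies to 1 / (1/c_out + 1/k²), independent of the spatial size and input width, so the savings grow with both kernel size and output channel count. MAC counts are only a proxy: measured latency on a device such as the TX2 also depends on memory traffic and on how well each layer type is supported by the runtime.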

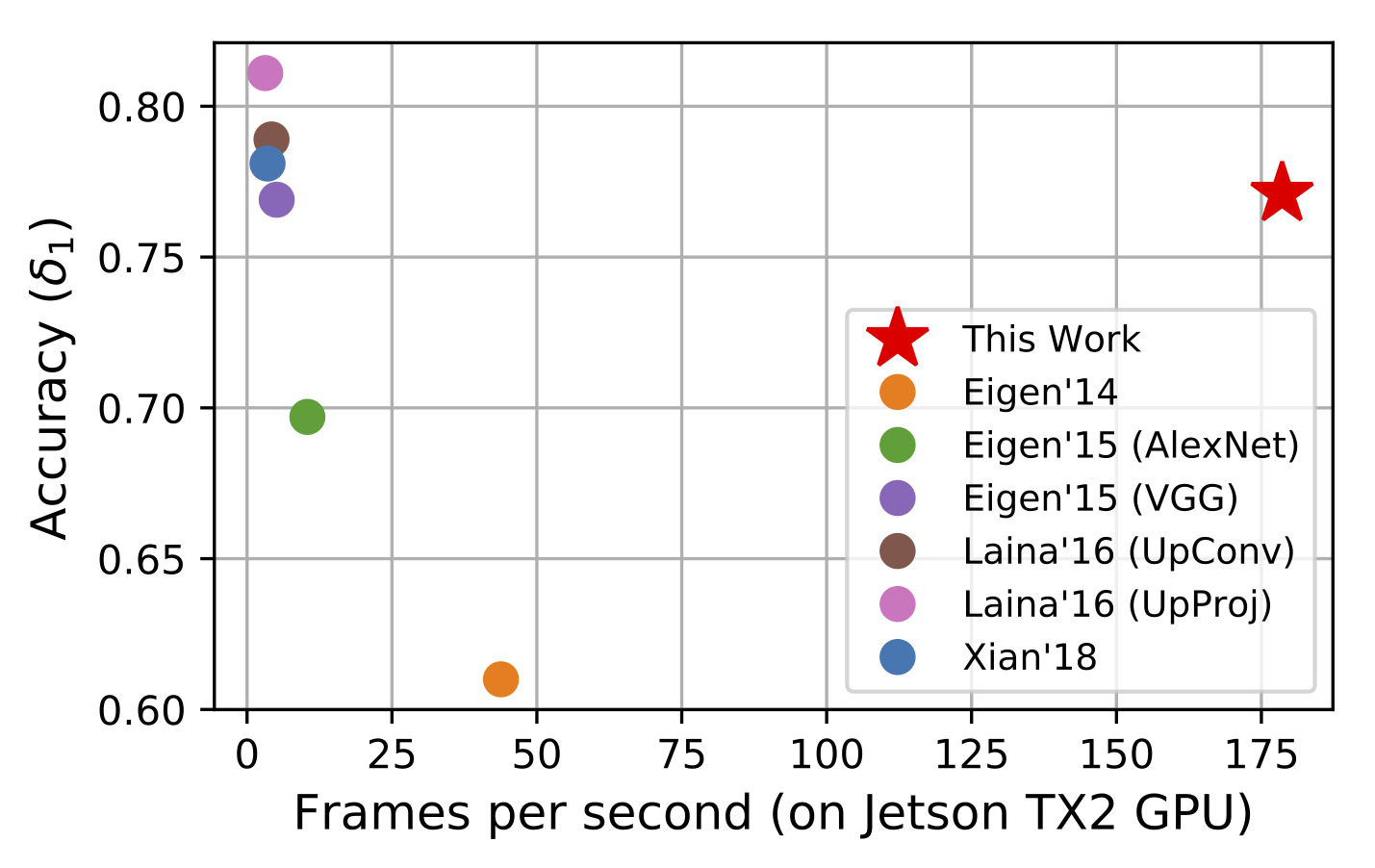

Accuracy versus speed tradeoff
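The speed axis of a trade-off like this is often easier to reason about as per-frame time than as frames per second. The sketch below converts the throughput figures quoted in the abstract (178 fps on the Jetson TX2 GPU, 27 fps on the TX2 CPU) into average milliseconds per frame. It assumes frames are processed one at a time, so 1000 / fps is the average time spent on each frame; the function name is illustrative.

```python
def frame_latency_ms(fps: float) -> float:
    """Average processing time per frame, in milliseconds, implied by a throughput in fps."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Throughput figures quoted in the FastDepth abstract.
    reported = {"Jetson TX2 GPU": 178, "Jetson TX2 CPU": 27}
    for platform, fps in reported.items():
        print(f"{platform}: {fps} fps -> {frame_latency_ms(fps):.1f} ms per frame")
    # Jetson TX2 GPU: 178 fps -> 5.6 ms per frame
    # Jetson TX2 CPU: 27 fps -> 37.0 ms per frame
```

On these numbers the GPU configuration processes frames roughly 6.6 times faster than the CPU-only one (178 / 27 ≈ 6.59).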

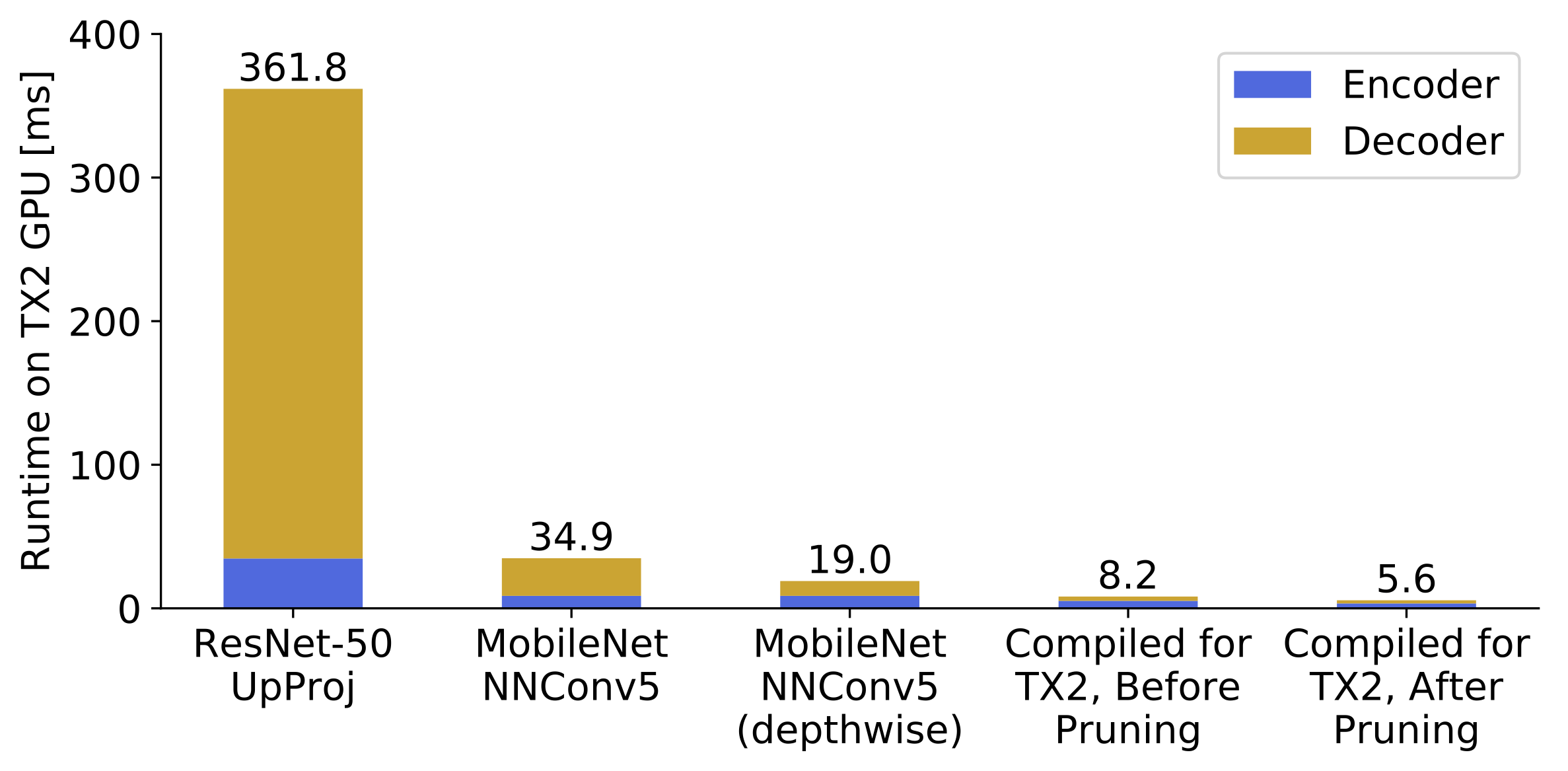

Impact of Optimizations
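Network pruning is one of the optimizations the abstract lists, but this page does not describe the pruning procedure. The sketch below uses plain L1-norm (magnitude-based) channel pruning as a generic stand-in, not the procedure from the paper: it keeps the output channels of one layer whose weights have the largest L1 norms and drops the matching input channels of the next layer so the two layers stay shape-compatible. Layers are modeled as hypothetical 1 × 1 convolutions stored as `weights[out_channel][in_channel]` lists, and all names are illustrative.

```python
from typing import List, Tuple

# weights[out_channel][in_channel]: a 1 x 1 convolution (hypothetical layout).
Layer = List[List[float]]


def l1_norm(row: List[float]) -> float:
    """Sum of absolute weights for one output channel."""
    return sum(abs(w) for w in row)


def channels_to_keep(layer: Layer, keep_ratio: float) -> List[int]:
    """Indices of the output channels with the largest L1 norms, in original order."""
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n_keep = max(1, round(len(layer) * keep_ratio))
    ranked = sorted(range(len(layer)), key=lambda i: l1_norm(layer[i]), reverse=True)
    return sorted(ranked[:n_keep])


def prune_pair(layer_a: Layer, layer_b: Layer, keep_ratio: float) -> Tuple[Layer, Layer, List[int]]:
    """Remove low-norm output channels of layer_a and the matching inputs of layer_b."""
    keep = channels_to_keep(layer_a, keep_ratio)
    pruned_a = [layer_a[i] for i in keep]
    pruned_b = [[row[i] for i in keep] for row in layer_b]
    return pruned_a, pruned_b, keep


if __name__ == "__main__":
    layer_a = [[0.1, -0.2], [1.0, 0.5], [0.0, 0.05], [-0.7, 0.3]]  # 4 outputs, 2 inputs
    layer_b = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0]]          # 2 outputs, 4 inputs
    pruned_a, pruned_b, keep = prune_pair(layer_a, layer_b, keep_ratio=0.5)
    print(keep)      # [1, 3]
    print(pruned_b)  # [[2.0, 4.0], [6.0, 8.0]]
```

A magnitude criterion like this does not directly target latency, the quantity this page emphasizes, and a pruned network is usually fine-tuned afterward to recover accuracy.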

BibTeX

@inproceedings{icra_2019_fastdepth,
  author = {Wofk, Diana and Ma, Fangchang and Yang, Tien-Ju and Karaman, Sertac and Sze, Vivienne},
  title = {{FastDepth: Fast Monocular Depth Estimation on Embedded Systems}},
  booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},
  year = {2019}
}